In the future, people may well hand their lives over to autonomous driving, but few realize that many companies are entrusting the job of building this life-or-death technology to a group of "game-playing" engineers.

This is not a joke.

"There are even people in the industry using the GTA 5 game engine for autonomous driving R&D," a person working on autonomous driving technology told Pinwan. GTA 5 is a hugely popular open-world action-adventure video game. Its content involves violence, gangs, and gunfights, and of course carjacking a passing car and then rampaging through the virtual world.

At first glance, it is hard to imagine what such a "crude" game has to do with "safety-first" autonomous driving.

In fact, what engineers are after is GTA 5's value as a ready-made "simulation platform".

Simulation platform testing, put simply, means testing an autonomous driving system in a virtual world that mimics real roads, so that the driving code can be improved faster and more cheaply.

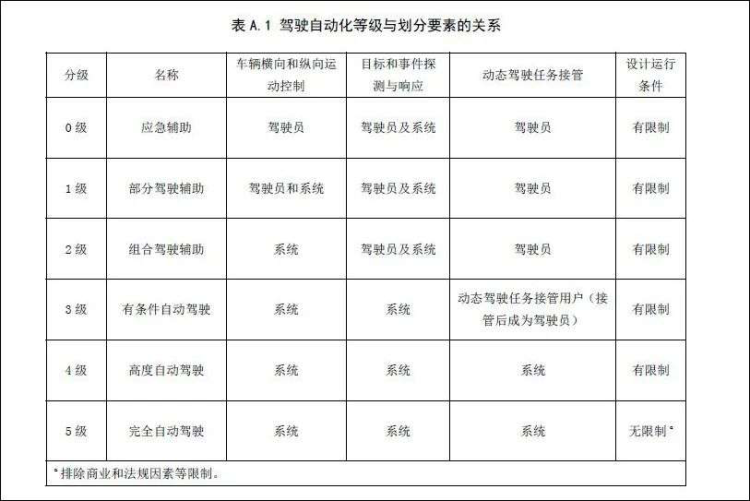

Achieving autonomous driving in the broad sense is arguably no easier than achieving strong artificial intelligence. Although the field still looks very hot, companies today remain a long way from commercially deploying L4 systems and turning a profit, and safety is one of the most important reasons.

In March 2018, an Uber self-driving test car struck and killed a pedestrian. The crash led directly to the loss of Uber's road-testing permit and prompted regulators in many countries to treat on-road test vehicles more strictly. On the other hand, large amounts of real-vehicle scenario data are one of the key conditions for autonomous driving to keep "evolving". In 2016, the RAND Corporation pointed out that an autonomous driving system would need to be tested over 11 billion miles to meet the conditions for mass-production use. That means even a fleet of 100 test vehicles, driving 24 hours a day, 7 days a week at an average speed of 25 miles (40 kilometers) per hour, would need about 500 years. Hence a paradox: regulators believe a car must be safe enough before it can go on the road, but technically, autonomous driving has to spend more time on the road collecting real data in order to become safer.
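The 500-year figure follows from simple arithmetic. Here is a minimal sketch of the calculation, assuming the fleet drives nonstop at a constant 25 mph and ignoring leap years, maintenance, and downtime:

```python
# Back-of-the-envelope check of the RAND figure quoted above.
REQUIRED_MILES = 11_000_000_000  # 11 billion test miles (RAND, 2016)
FLEET_SIZE = 100                 # test vehicles driving in parallel
AVG_SPEED_MPH = 25               # average speed, miles per hour
HOURS_PER_YEAR = 24 * 365        # 7x24 operation, ignoring leap years


def years_to_cover(miles: float, fleet: int, speed_mph: float) -> float:
    """Years needed for `fleet` cars at `speed_mph`, driving nonstop, to log `miles`."""
    miles_per_year = fleet * speed_mph * HOURS_PER_YEAR  # 21.9 million miles/year
    return miles / miles_per_year


years = years_to_cover(REQUIRED_MILES, FLEET_SIZE, AVG_SPEED_MPH)
print(f"{years:.0f} years")  # prints "502 years"
```

Since the result scales inversely with fleet size, even a 1,000-car fleet would still need about 50 years under the same assumptions.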

As a result, one company after another has turned to simulation platforms.

As with real road tests, simulation testing needs to ingest large amounts of scenario data to speed up algorithm iteration. According to published test data, Waymo, one of the earliest companies in autonomous driving research, which began in 2009, had by January 2020 logged 20 million miles on real roads and 10 billion miles in virtual simulation: two figures of entirely different orders of magnitude.

Simulation testing also cuts companies' R&D and operating costs. Whether 20 million miles or 11 billion miles, these are enormous distances, and covering them with real vehicles would cost more time and money than almost any company can afford. Waymo burned through US$1 billion a year on its Robotaxi (self-driving taxi) project, and the lidar alone cost US$75,000 per vehicle. Studies have shown that large-scale intelligent simulation systems greatly reduce real-vehicle testing costs: simulation costs only 1% as much as road testing and can extend test mileage to thousands of times that of real road tests.

Since the Uber accident, governments have grown wary of companies' autonomous driving road tests, and the management of real-vehicle testing on public roads has become increasingly strict. Test licenses are issued for specific test sites and designated roads, and because these have limited capacity, companies often have to queue. These objective constraints have slowed the accumulation of data from real-vehicle testing.

At the same time, fixed sites and designated roads limit the range of scenarios a real-vehicle test can cover, so it cannot meet the testing needs of unusual road conditions, in other words, long-tail scenarios. Long-tail scenarios are usually understood as all sudden, low-probability, and unpredictable situations, such as intersections with malfunctioning traffic lights, drunk drivers, and extreme weather. To exhaust the scenarios an autonomous driving system might encounter and to ensure the system's safety and reliability, practitioners need to run many more simulations and tests of long-tail scenarios on simulation platforms.
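To see why the long tail is hard to cover on fixed test roads, consider how quickly scenario combinations multiply. The sketch below uses four hypothetical scenario dimensions with made-up values; it is an illustration only, not the scenario format of any real simulation platform:

```python
from itertools import product

# Hypothetical scenario dimensions; real platforms describe scenarios in far more detail.
WEATHER = ["clear", "rain", "fog", "snow"]
SIGNAL_STATE = ["normal", "dark", "stuck_red", "flashing"]
OTHER_DRIVER = ["normal", "drunk_weaving", "sudden_brake"]
TIME_OF_DAY = ["day", "dusk", "night"]


def enumerate_scenarios():
    """Yield every combination of the scenario parameters as a dict."""
    for weather, signal, driver, tod in product(WEATHER, SIGNAL_STATE, OTHER_DRIVER, TIME_OF_DAY):
        yield {"weather": weather, "signal": signal, "other_driver": driver, "time": tod}


scenarios = list(enumerate_scenarios())
print(len(scenarios))  # 4 * 4 * 3 * 3 = 144
```

Each added dimension multiplies the total, so a handful of realistic parameters soon yields thousands of cases. In simulation each case is just another scripted run, whereas on a designated test road a combination such as a dark signal in fog at night might never come up during the test window.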

Clearly, the industry has a strong need for autonomous driving simulation testing. So what exactly is it?

The team of Professor Henry Liu at the University of Michigan's Center for Connected and Automated Transportation once told Pinwan: “In simple terms, simulation testing is like building a game based on the real world and letting an autonomous car keep driving around in that virtual world.” By that logic, data from players driving in GTA 5 could, to some extent, even be used for testing.

That is indeed what is happening: many companies have chosen game engines such as Unity and Unreal Engine (UE4) as virtual simulation platforms for autonomous driving. Simulation platforms built on UE4 include the open-source AirSim and Carla, as well as Tencent's TAD Sim. Tencent developed TAD Sim by drawing on its own expertise in games, using in-game scene reconstruction, 3D reconstruction, physics engines, MMO synchronization, agent AI, and other technologies to improve the reproducibility and efficiency of autonomous driving simulation tests. Baidu's Apollo platform partnered with Unity to build its full-stack, open-source autonomous driving software platform.

Autonomous driving companies choose game engines as simulation platforms mainly because they enable full-stack, closed-loop simulation, especially of the perception module: the engine can reconstruct a three-dimensional environment and simulate, within it, the input signals of sensors such as cameras and lidar.

Simulation amounts to reconstructing the real world. When training perception algorithms, the simulation system comes with the ground truth of every scene element and can automatically generate all kinds of weather and road conditions without manual labeling, ensuring coverage. Ground truth is the objective attributes and values of every object. What the human eye or a vehicle's sensors see is an observed value; ground truth is an object's absolute, objective attribute, independent of any observer's observations. Because the simulation system generates every element itself, it holds the objective value of everything in the scene without having to observe it, so the labels a perception system needs can be produced directly from the simulator's ground truth.
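As an illustration of how simulator ground truth can stand in for manual labeling, here is a minimal sketch that turns an object's known 3D position and size into a 2D bounding-box label. It assumes a simple pinhole camera, boxes aligned with the camera axes, every box corner in front of the camera, and no occlusion or lens distortion. `SimObject`, `PinholeCamera`, and `auto_label` are hypothetical names, not the API of Carla, AirSim, or TAD Sim:

```python
from dataclasses import dataclass
from itertools import product


@dataclass
class SimObject:
    """An object the simulator placed in the scene, in camera coordinates (metres)."""
    label: str
    center: tuple[float, float, float]  # x right, y down, z forward
    size: tuple[float, float, float]    # width (x), height (y), length (z)


@dataclass
class PinholeCamera:
    fx: float  # focal length in pixels, horizontal
    fy: float  # focal length in pixels, vertical
    cx: float  # principal point, horizontal
    cy: float  # principal point, vertical

    def project(self, x: float, y: float, z: float) -> tuple[float, float]:
        """Project a 3D point in camera coordinates onto the image plane."""
        if z <= 0:
            raise ValueError("point is behind the camera")
        return self.fx * x / z + self.cx, self.fy * y / z + self.cy


def auto_label(obj: SimObject, cam: PinholeCamera) -> dict:
    """Derive a 2D bounding-box label purely from the simulator's ground truth."""
    ox, oy, oz = obj.center
    w, h, l = obj.size
    # The 8 corners of the object's 3D box.
    corners = [
        (ox + sx * w / 2, oy + sy * h / 2, oz + sz * l / 2)
        for sx, sy, sz in product((-1, 1), repeat=3)
    ]
    pixels = [cam.project(*c) for c in corners]
    us = [u for u, _ in pixels]
    vs = [v for _, v in pixels]
    # The tightest image-space box enclosing all projected corners.
    return {"label": obj.label, "bbox": (min(us), min(vs), max(us), max(vs))}


cam = PinholeCamera(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
cyclist = SimObject("cyclist", center=(0.0, 0.0, 10.0), size=(0.6, 1.8, 1.8))
print(auto_label(cyclist, cam))
```

Because the simulator placed the cyclist itself, no annotator has to draw the box. Production tools go further, handling rotated objects, occlusion, and per-pixel segmentation.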

Perception training with traditional methods generally requires manual labeling. For example, a girl riding a bicycle in an image must be boxed and labeled by hand. Third-party labeling runs to US$1 billion a year in labor costs. Waymo relies heavily on virtual testing for algorithm development and regression testing.

After an engineer adjusts an algorithm, testing it on a simulation platform may take only a few minutes, whereas a road test may require half a day or a full day just to book time with the autonomous driving fleet. On a simulation platform, as long as computing power allows, 1,000 or 2,000 vehicles can be tested concurrently.
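A rough sketch of that high-concurrency pattern, assuming a toy braking model stands in for a full simulator: each scenario is independent, so a batch of 1,000 can be fanned out across workers. `BrakeScenario`, `run_scenario`, and `run_batch` are hypothetical names. The standard library's `ThreadPoolExecutor` is used only to show the dispatch pattern; a real cloud simulation would send each scenario to a separate simulator instance or machine:

```python
import random
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class BrakeScenario:
    speed_mps: float   # ego vehicle speed, metres per second
    gap_m: float       # distance to a stopped obstacle, metres
    reaction_s: float  # perception + planning latency, seconds
    decel_mps2: float  # achievable braking deceleration, m/s^2


def run_scenario(s: BrakeScenario) -> bool:
    """Toy 'simulation': True if the vehicle stops before reaching the obstacle."""
    # Distance covered during the reaction time, plus braking distance v^2 / (2a).
    stopping = s.speed_mps * s.reaction_s + s.speed_mps ** 2 / (2 * s.decel_mps2)
    return stopping < s.gap_m


def run_batch(scenarios, workers: int = 32) -> list[bool]:
    """Run independent scenarios concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(run_scenario, scenarios))


rng = random.Random(0)  # fixed seed so the batch is reproducible
batch = [
    BrakeScenario(rng.uniform(5, 30), rng.uniform(20, 80), rng.uniform(0.1, 0.5), rng.uniform(4, 8))
    for _ in range(1000)
]
results = run_batch(batch)
print(f"{sum(results)} of {len(results)} scenarios stopped in time")
```

Because no scenario depends on another's result, throughput grows with the number of workers, which is what lets simulation run thousands of "vehicles" at once while a physical fleet is limited by its size.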

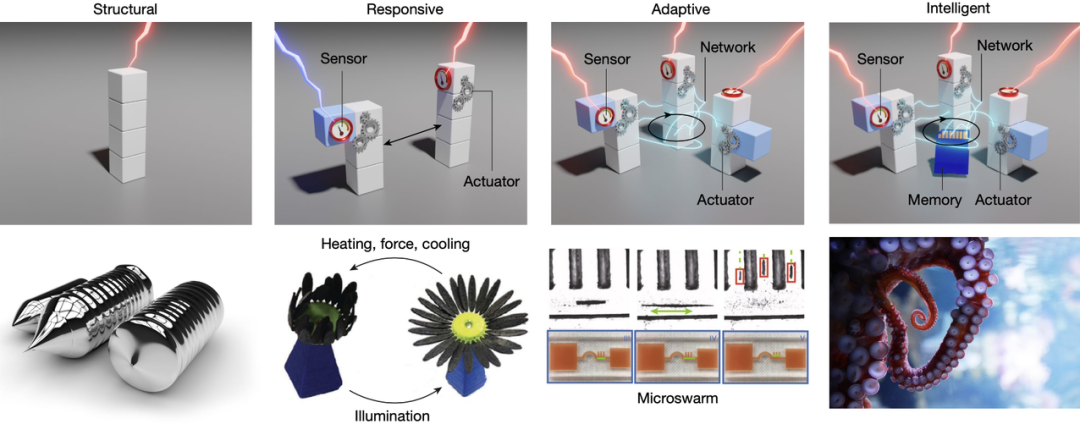

In summary, the core capabilities of autonomous driving simulation testing are: geometric reconstruction of the scene (3D scene simulation plus sensor simulation); reconstruction of the scene's logic (decision and planning simulation); physical reconstruction of the scene (control plus vehicle dynamics simulation); and the added advantages of high concurrency and cloud-based simulation.

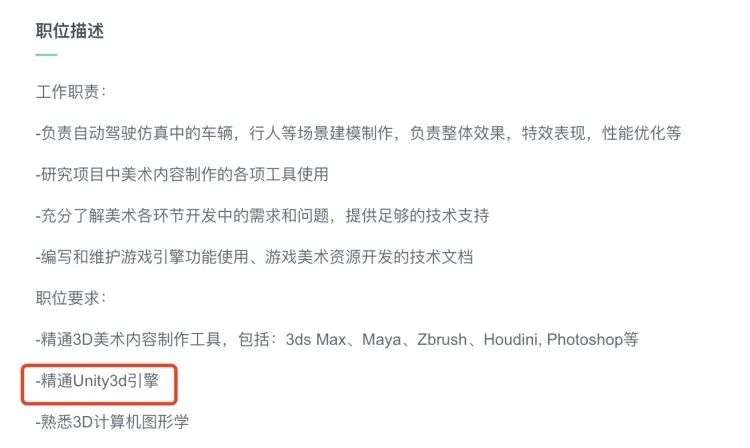

As a result, both established and new autonomous driving companies are recruiting versatile engineers with game engine backgrounds such as Unity and Unreal. An industry insider told Pinwan: “First, simulated environments need to be rendered more and more realistically; second, these engineers can optimize the game engine to reduce the cost of the entire simulation test.”

Recruitment requirements for a simulation engineer at an autonomous driving company

Autonomous driving companies are also looking for ways to make simulation testing more effective. Raising the fidelity of virtual scenes is generally seen as a sensible approach, but it is not easy.

Professor Henry Liu said: "Because this environment is built on mathematical models, the closer we want the results to be to the real world, the more complex the models become and the slower the computation." Building a highly realistic virtual world may be no easier than achieving autonomous driving itself. Simulation testing is not perfect: its results have limitations, and it cannot solve every problem autonomous driving companies face. Waymo's 20 million miles of real-world driving and more than 15 billion miles of simulation as of 2020 may leave most latecomers in the industry far behind, but 20 million miles is still a drop in the bucket on the road to autonomous driving. Data is not all-powerful, and there is no evidence that simulation can fully reproduce the complexity of the real world.

At present, every data-driven method will have scenarios in which it fails. First, the data itself is uncertain: for many occluded objects, even human labels carry a lot of uncertainty. Second, because the models are so complex, it is difficult to cover every model in a virtual engine, especially for scenes in Europe and the United States.

Unlike the human eye, an algorithm has a much harder time recognizing images. Subtle changes to a few key elements in an image can produce wildly different recognition results, and even human eyes sometimes misjudge. Massive data is therefore not a sufficient condition for L4, let alone L5, autonomous driving, and hundreds of millions of kilometers of "safe driving" on a virtual simulation platform cannot be expected to prove absolute safety on real roads.

A professional told Pinwan: “Although using game engines for simulation testing has solved some of autonomous driving's problems to a certain extent, in the final analysis its focus is still on testing. Its main purpose is to keep autonomous driving algorithms from repeating mistakes that have already been made, or to test them in advance in scenarios engineers can anticipate. It is more about ensuring the logical correctness of the entire system.”

This article is from the WeChat official account "Pinwancool" (ID: pinwancool), by Hong Yuhan, and is published by 36Kr with authorization.
