In recent years, anyone who follows the technology industry will have noticed that whenever a manufacturer stages a major launch, words such as "disruptive", "ultimate", and "revolutionary" are sure to appear at the press conference.

A few days ago, at the iPhone 12 launch, Tim Cook used the phrase "new era". More precisely, the iPhone is officially entering a new era of 5G. Domestic consumers, however, are no strangers to 5G. Because Apple's "epoch-making" product did not meet market expectations, about 380 billion was wiped off its market value that day. Only sales will show whether Apple has really entered a "new era".

Rather than the high-profile consumer electronics field, this article focuses on the data center industry, which most people are less familiar with, and on the computing chips further upstream of it. With the large-scale adoption of cloud computing and the exponential growth of AI computing, data centers have been raised to an unprecedented level of importance.

I recently attended a forum on the digital communications industry and heard an expert from the China Academy of Information and Communications Technology argue that data centers, alongside 5G, will become the next commanding heights of digital technology. We heard a similar view from Nvidia CEO Huang Renxun (Jensen Huang) at Nvidia's 2020 GPU Technology Conference (GTC): the data center has become the new unit of computing.

Huang Renxun's confidence rests on a new processor launched at the conference, the DPU, and on DOCA, the software ecosystem architecture built around it. According to Nvidia, the DPU can be combined with the CPU and GPU to form a single, fully programmable AI computing unit that delivers unprecedented security and computing power.

So can the DPU truly become as important to computing as the CPU and GPU, and bring about a major innovation in the data center? And where exactly does the innovation lie? These questions still need to be examined.

The "core" of Nvidia's DPU

According to Nvidia's presentation at GTC, the DPU (Data Processing Unit) is in fact an SoC that integrates ARM processor cores, a VLIW vector computing engine, and the functions of a smart network card (SmartNIC). It is used mainly in distributed storage, network computing, and network security.

The DPU's main function is to take over the work that previously consumed CPU resources in the data center for distributed storage and network communication. Before the DPU, the SmartNIC was already gradually replacing the CPU in handling network security and network interconnection protocols. The DPU amounts to an upgraded replacement for the SmartNIC: it strengthens the SmartNIC's handling of network security and network protocols, and it also integrates and strengthens the handling of distributed storage. It can therefore replace the CPU more fully in these areas, freeing CPU computing power for other applications.

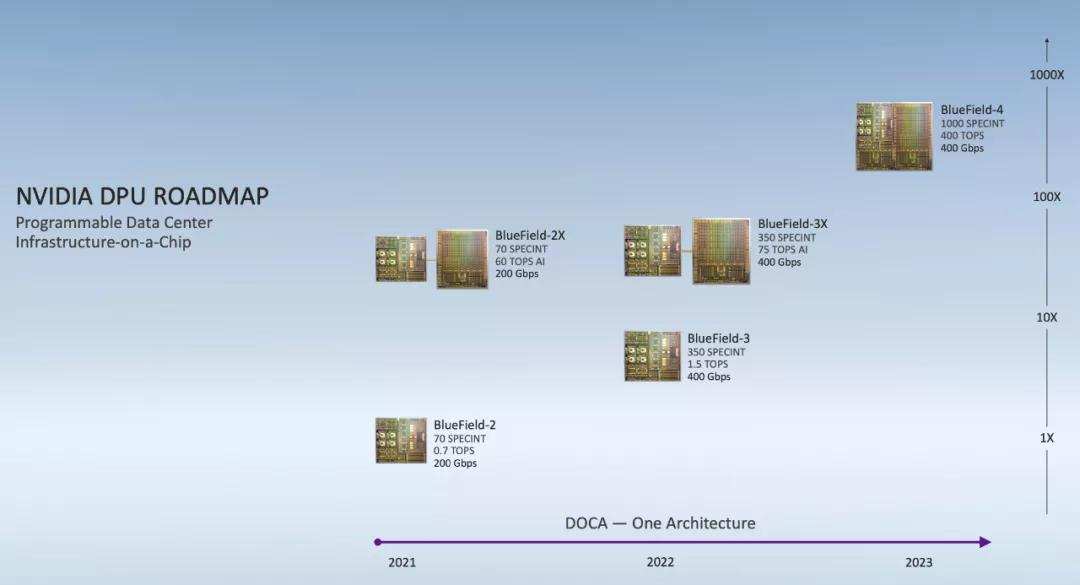

Nvidia's technological breakthrough in the DPU stems from its acquisition of the Israeli chip company Mellanox last year and from the two DPUs in the BlueField series developed on that company's hardware: the Nvidia BlueField-2 DPU and the BlueField-2X DPU.

According to reports, the BlueField-2 DPU has all the features of the Nvidia Mellanox ConnectX-6 SmartNIC. Combined with eight 64-bit ARM Cortex-A72 processor cores, it is fully programmable and provides a data transfer rate of 200 gigabits per second, accelerating critical security, networking, and storage tasks in the data center.

The key point is that a single BlueField-2 DPU can deliver data center services that would otherwise consume 125 CPU cores, effectively releasing those CPU cores for other work.
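To make the scale of that claim concrete, here is a minimal arithmetic sketch in Python. It takes the 125-core figure quoted above as given (it is Nvidia's claim, not a measurement made for this article) and treats it as a best-case upper bound. The function names, the fleet size, and the per-server core count are hypothetical values chosen only for illustration.

```python
# Figure quoted in the article: one BlueField-2 DPU can provide services
# that would otherwise consume 125 CPU cores (vendor claim, best case).
CORES_REPLACED_PER_DPU = 125


def cores_freed(num_dpus: int, cores_per_dpu: int = CORES_REPLACED_PER_DPU) -> int:
    """Upper-bound estimate of CPU cores released by offloading to DPUs."""
    if num_dpus < 0:
        raise ValueError("num_dpus must be non-negative")
    return num_dpus * cores_per_dpu


def equivalent_servers(num_dpus: int, cores_per_server: int) -> float:
    """Express the freed cores as a number of whole-server equivalents.

    cores_per_server is a hypothetical parameter: pick whatever matches
    the servers in the fleet being considered.
    """
    if cores_per_server <= 0:
        raise ValueError("cores_per_server must be positive")
    return cores_freed(num_dpus) / cores_per_server


if __name__ == "__main__":
    # Hypothetical fleet: 64 DPUs installed in servers with 64 cores each.
    print(cores_freed(64))             # cores released under the claim
    print(equivalent_servers(64, 64))  # same figure in server equivalents
```

Real savings depend on how much of a given workload is actually networking, storage, and security processing, so the result is best read as a ceiling rather than a forecast.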

The BlueField-2X DPU has all the key features of the BlueField-2 DPU, enhanced by the AI capabilities of an Nvidia Ampere GPU. On Nvidia's roadmap, the future BlueField-4 will introduce CUDA and Nvidia AI to greatly accelerate computer vision applications in the network.

Also noteworthy is the software development kit Nvidia announced alongside the DPU: DOCA (Data-Center-Infrastructure-On-A-Chip Architecture). Nvidia's experts compare DOCA to CUDA for the data center server field. Its purpose is to help developers build applications on DPU-accelerated data center infrastructure, thereby enriching the DPU's application development ecosystem.

From the above, two of Nvidia's ambitions are visible. First, the DPU is trying to replicate the path by which the GPU replaced the display accelerator card and became a general-purpose graphics chip. Second, DOCA is trying to replicate the ecosystem role that CUDA played as the GPU became general-purpose.

Taken together with Nvidia's recently announced acquisition of ARM, an important consideration for Nvidia appears to be using ARM-architecture CPUs as the core and extending its server acceleration products to every server application scenario, so as to achieve a bigger breakthrough in data center servers. The target, naturally, is the x86 server ecosystem represented by Intel CPUs.

Before examining whether the DPU can challenge the CPU's dominance, it is worth briefly reviewing Nvidia's position in the data center.

Nvidia's data center "ambition"

After growth in its gaming graphics business slowed and its results fell sharply when the cryptocurrency boom faded, Nvidia, having weathered many twists and turns, finally bet firmly on AI computing and the data center.

In 2017, quarterly revenue from Nvidia's data center business exceeded US$500 million for the first time, a year-on-year increase of 109%, which led Huang Renxun to strongly affirm the value of the data center business at a conference.

As early as 2008, Nvidia was using its first Tesla GPU accelerators and an early CUDA programming environment to bring GPU computing to the data center, trying to offload more parallel computing from the CPU to the GPU. This became the long-term strategy behind the evolution of Nvidia's GPUs.

Since then, with the explosive growth of AI computing demand in data centers, AI hardware has become key to the expansion and construction of more and more of them. As massive AI computing power became a hard requirement for data centers, Nvidia's GPUs, with their powerful parallel and floating-point computing capabilities, broke through the computing bottleneck of deep learning and became the first choice for AI hardware. This opportunity gave Nvidia a firm foothold in data center hardware. Of course, Nvidia's ambitions go far beyond that.

Nvidia's key move came in March 2019, when it set out to acquire the Israeli chip company Mellanox for US$6.9 billion. Mellanox specializes in a broad range of data center products for servers, storage, and hyper-converged infrastructure, including Ethernet switches, chips, and InfiniBand intelligent interconnect solutions. Combining Nvidia's GPUs with Mellanox's interconnect technology allows data center workloads to be optimized across the entire compute, network, and storage stack, achieving higher performance, higher utilization, and lower operating costs.

At the time, Huang Renxun described Mellanox's technology as the company's "X factor": the means of turning the data center into a large-scale processor architecture capable of meeting high-performance computing demands. The DPU we see today is a first prototype of that architecture.

This year, Nvidia agreed to acquire the semiconductor design company ARM from SoftBank for US$40 billion. One of its aims is to apply ARM-architecture CPU designs to the future computing models Nvidia intends to build, chiefly supercomputing, autonomous driving, and edge computing. Combining Nvidia's GPU-based AI computing platform with ARM's ecosystem would not only strengthen Nvidia's high-performance computing (HPC) capabilities but also keep driving growth in its data center revenue.

It is fair to say that Nvidia's success in the data center depends on whether it can deliver computing at data center scale. From developing its own DGX series of servers, to integrating Mellanox's technology, to building a new data center computing architecture with the help of the ARM ecosystem, each step is preparation for transforming the data center business.

Of course, whether Nvidia can reach this goal also depends on whether Intel lets it.

How far is Nvidia from challenging Intel?

At present, about 95% of GPUs in data centers are still attached to x86 CPUs. If Nvidia only adds more GPUs, it cannot shake Intel's dominance in data center servers. Nvidia is clearly no longer satisfied with capturing new growth alone. It hopes to cut into the data center's existing installed base, that is, to replace the x86 CPUs led by Intel (and AMD) with its own chips.

Since it began its bid for ARM, Nvidia has repeatedly signaled its determination to use ARM processors to take a larger share of the data center server market. The DPU, with its integrated ARM cores, will be its first point of entry into the existing data center market as a replacement for x86 CPUs.

Entering this market with the DPU, rather than pitting ARM-core CPUs directly against x86 CPUs, is in fact the more practical approach. It amounts to gradually replacing the CPU with a next-generation CPU product that integrates networking, storage, security, and other tasks. Even if the embedded ARM cores cannot match an x86 CPU of the same generation, the overall performance should still exceed that of the x86 CPU on these tasks, thanks to the dedicated acceleration modules integrated on the DPU SoC. This "Tian Ji's horse racing" strategy (pitting one's strengths against an opponent's weaknesses) is likely to mark the beginning of Nvidia's replacement of low-end x86 CPUs.

But if Nvidia wants to challenge Intel in the mid-range and high-end processor market, it faces a series of difficulties.

First, Nvidia's GPUs and x86 CPUs have already formed a very stable and strongly complementary relationship. To build high-end servers on ARM-based processors, Nvidia also needs ARM processor performance to improve significantly, and how that will happen is not yet clear.

Second, Intel has already begun responding to Nvidia's challenges. As early as 2017, Intel announced that it would develop a full-stack GPU product portfolio, and its first GPUs are expected to launch next year across the various markets that use GPUs.

To block Nvidia's expansion in AI computing and autonomous driving, Intel has also acquired Nervana and Movidius to build its position in edge AI computing, and Mobileye to build its position in autonomous driving. In 2018, Intel also announced oneAPI, a full-stack open software ecosystem for heterogeneous computing, in response to the expansion of the CUDA ecosystem. In other words, Intel is not only making moves in Nvidia's backyard but also reinforcing its own x86 server ecosystem.

For Intel, too, the data center is becoming a core part of the business. In Q4 2019, Intel's data center business surpassed its PC business to become its main source of revenue, and this year's reorganization of Intel's technology organization and executive team has been seen as the start of a comprehensive transformation toward the data center business.

It is foreseeable that in the data center processor business Nvidia will meet Intel's strongest defense and counterattack, and that server integrators will be the beneficiaries of this competition.

As the saying goes, the mantis stalks the cicada, unaware of the oriole behind: Nvidia also has to face pursuit from its rival AMD. Not long ago, it emerged that AMD would spend US$30 billion to acquire Xilinx, a move seen as a two-pronged strategy to take on Intel while blocking Nvidia.

In addition, Nvidia faces a challenge in the data center processor business from customers' in-house chips. Cloud service providers are unwilling to hand their computing cores over to Nvidia entirely. AWS, Google, Alibaba, and Huawei are all already deploying their own cloud processors.

In any case, the data center has become the main battlefield for veteran chip giants such as Intel, Nvidia, and AMD. How Nvidia finds a way into high-end server processors, squeezed between the x86 camp and cloud customers' in-house chips, is the key question. The newly released DPU can only be regarded as a preliminary attempt.

In the future, the data center contest will play out fully around AI, supercomputing, and related fields. With strong enemies ahead, pursuers behind, and allies striking out on their own, Nvidia's data center journey still has a long way to go.
